Foiset hp 304 Black and Colour Cartridges, Replacement for hp 304 Black Cartridge, for HP Envy 5010 5020 5030 5032 Deskjet Envy 2620 2630 3720 3730 3750 3760 2622 2632 2633 2634 3733 3735 AMP 100 : Amazon.it: Computers

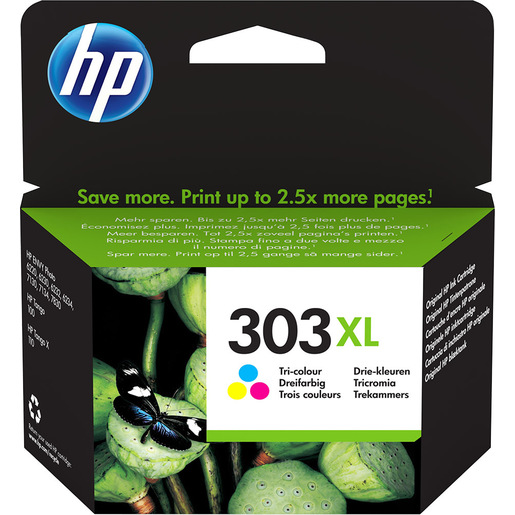

HP 303XL Original High-Capacity Tri-colour Ink Cartridge | Cartridges, Toner and Supplies on offer at Unieuro

HP 304 Black, N9K06AE, Original HP 120-Page Cartridge, Compatible with HP DeskJet 2620, 2630, 3720, 3730, 3750 and 3760 and HP ENVY 5010, 5020 and 5030 Printers : Amazon.it: Computers

HP 303XL Black, T6N04AE, Original HP High-Capacity Cartridge, Compatible with HP Tango and Tango X and HP Envy 6220, 6230, 6232, 6234, 7130, 7134 and 7830 Printers : Hp: Amazon.it: Computers

HP 364 Original Black/Cyan/Magenta/Yellow Ink Cartridges, Pack of 4 | Cartridges, Toner and Supplies on offer at Unieuro

300 XL Replacement for HP 300 xl Black and Colour Cartridges, HP 300 Cartridge Compatible with PhotoSmart C4600 C4680 C4780 C4783 (1 Black, 1 Tri-colour) : Amazon.it: Computers